How many times have you felt frustrated at Tableau Desktop or Server because your data source extract didn’t work or even worse it stopped working all of a sudden? Has the thought of going back to a live database or even going back to what you used to do before Tableau ever cross your mind? Well, STOP RIGHT NOW! In this post, I’ll share with you a very interesting scenario that even had Tableau Support stumped. Just for clarity, this is for database connections only, but similar debugging strategy can be applied to any data source.

Scenario

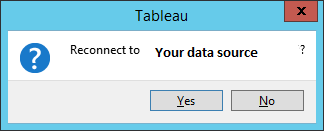

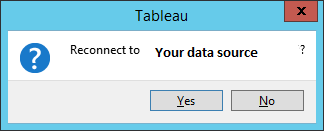

I was building out a new data source that connects to a Postgres database. The data source has multiple inner joins but nothing complex. Following proper Tableau guidelines, this is not a custom query. With a live connection, I had no issues rendering a viz. But, once I attempted to create an extract: BOOM! I would get an error. The error read: “reconnect to database?”.

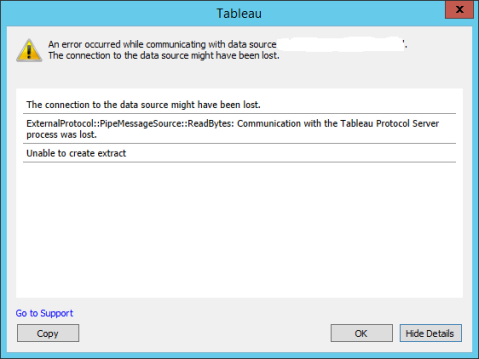

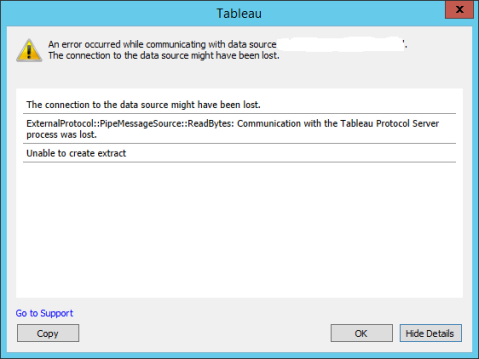

If I hit No, then I would get the full error:

Solution

Triage

When I get an error on Tableau Desktop regarding extracts, the first thing I do is look at the logs. They are located under your Tableau repository (click on File and then Repository Location to see where it exists). Look through the logs for a few keywords: error, extract, data source name. If you can reproduce the issue then clean out your log files, reproduce the issue, and then search through the log files again. This will take away any issues with looking at old errors that are not relevant to your issue.

In my case, all I got was that the connection to the Tableau Protocol Server process was lost and nothing else. What do you do in this case? Who do you call? Well – if you can query the database and have no issues there such as syntax, database connection, or authentication, then submit a Tableau Support Case. That is what I did. One important thing here – keep a copy of your Tableau Desktop log files. They will help Tableau Support.

Tableau Support

Depending on the severity of your issue and the backlog, you may have to wait a day or two before you get a response. Once I got a tech assigned, we did a WebEx and were able to reproduce the issue. Re-collected the log files and the case was moved to Level 2. We had a second WebEx and gathered more stats but again we couldn’t figure out the issue. Let me share what additional logs we generated so you have a better idea of what goes on.

Tableau Desktop in Debug Mode

Did you know Tableau Desktop can run in Debug Mode? It just means it logs more information. Now, I probably should have done that at the beginning to see if it got me anywhere. But, will definitely do it next time. If you are curious, before you open Tableau Desktop, open a command prompt or terminal. Change your directory to the location of your Tableau Desktop exe file and run this command:

tableau.exe -DLogLevel=debug

Generate and ODBC Driver Trace

Since the issue was with Postgres, we wanted to make sure that there wasn’t an issue with the database driver. The driver is basically how Tableau Desktop and Server connect to your database. There are many database systems that Tableau supports and for most (with the exception of Postgres because Tableau ships with it), you will need the database driver installed to use it.

The Tableau Support tech asked me generate the ODBC driver trace before we started Tableau Desktop and re-started the extract. Why, you may ask. Well, an ODBC driver trace will log all the columns that are being requested and their data types. If there are any errors, they will be logged there as well.

To start an ODBC Driver trace, open ODBC Administrator tool and click on Tracing tab. Choose a location to save the trace file and then start the trace. Open Tableau Desktop, reproduce your issue and then stop the trace from the same ODBC Administrator tool.

We collected all this information to pass on to Engineering to figure out what was going on. For your own curiosity, take a look at these files to see what information you can gather and maybe it will take you closer to solving your issue.

Intermission

While I was waiting to hear back from Support, I kept trying to think of why this data source was having an issue. There were no database changes, there was no change of permissions, connections, Postgres driver, etc. In fact, we had other data sources on Tableau that used the Postgres driver and had no issues. We were able to do simple joins with other tables, so what was different?

Mystery Solved!

If it’s not in the database schema well maybe it’s in the DATA ITSELF?! That’s what I told myself. That’s exactly what it was. Postgres defaults to client character encoding of UTF-8. That doesn’t work when your data has special characters in them. There was one specific column that had special characters. Since I knew the data behind it, I knew which one it was.

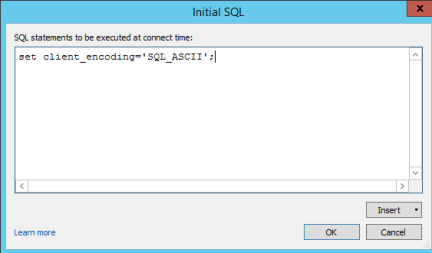

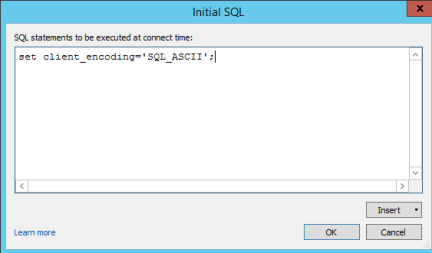

Now that I knew what my problem was, how did I fix it? I could create a TDC (Tableau Datasource Customizations) file for that. Link below for more details. But, what I did instead was to set an “Initial SQL” statement. In Tableau, you could set an initial sql statement which Tableau will execute prior to running your data source query. In Postgres, you can set the character encoding. I added a simple line:

SET CLIENT_ENCODING=SQL_ASCII

TA-DA! My extracts worked and the issue was resolved. Next time you have an issue with your extracts, think about the data you are using. Does it have special characters? They may be the cause of your problem.

Resources

Postgres Character Encoding: https://www.postgresql.org/docs/current/static/multibyte.html

Tableau Datasource Customization Files: https://onlinehelp.tableau.com/current/pro/desktop/en-us/odbc_customize.html#global_tdc

ODBC Trace: https://docs.microsoft.com/en-us/sql/odbc/admin/setting-tracing-options

Do you know if your Tableau Server is healthy? When was the last time you checked with your “Tableau Doctor” to make sure your TS had a clean bill of health?

Do you know if your Tableau Server is healthy? When was the last time you checked with your “Tableau Doctor” to make sure your TS had a clean bill of health?

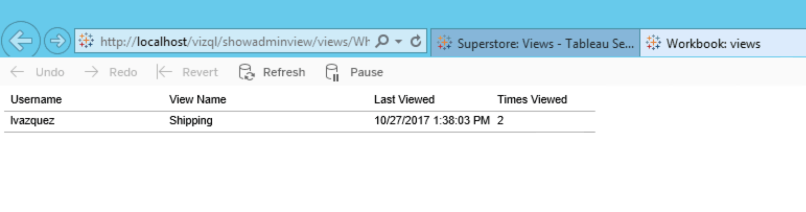

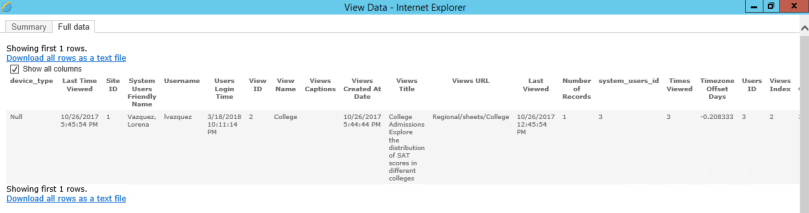

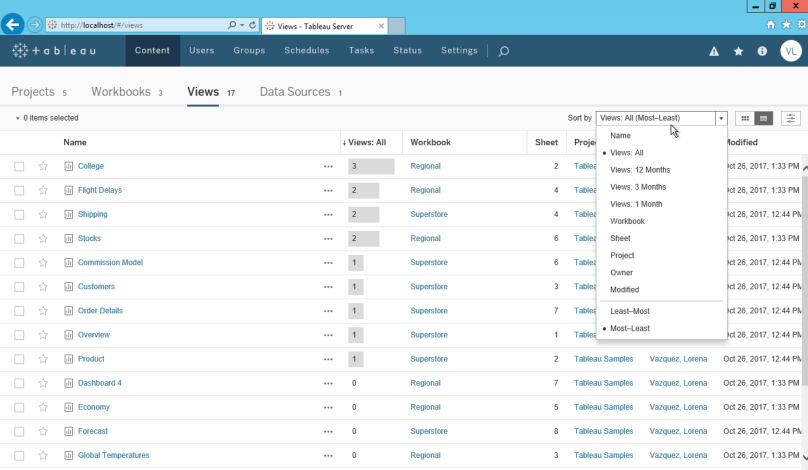

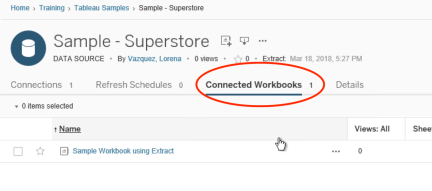

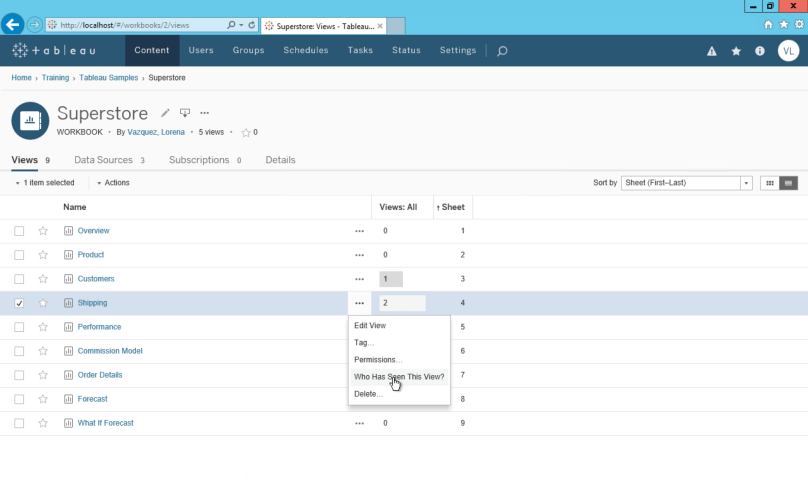

This provides you with access to the underlying data just like you would in any other workbook. Look at this progression below.

This provides you with access to the underlying data just like you would in any other workbook. Look at this progression below.